There are various ready-made ways to get a Kubernetes (K8S) cluster, for example:

- Minikube – a ready-made cluster that can be deployed on a single computer. A good way to get acquainted with Kubernetes.

- Kubespray – a set of Ansible roles.

- Managed cloud clusters, such as those offered by AWS, Google Cloud and so on.

Using one of the ready-made implementations is a quick and reliable way to deploy the Docker container orchestration system. However, we will consider manually creating a Kubernetes cluster of 3 nodes – one master (for management) and two worker nodes (for running containers).

To do this, we will prepare the systems, install Docker and Kubernetes, and then create the cluster.

System preparation

These actions are performed on all nodes of the future cluster. This is necessary to meet the software system requirements for our cluster.

System configuration

- Set node names. To do this, execute the commands on the corresponding servers:

hostnamectl set-hostname kubmaster1.automationtools.me

hostnamectl set-hostname kubworker1.automationtools.me

hostnamectl set-hostname kubworker2.automationtools.me

In this example, we set the name kubmaster1 for the master node, and kubworker1 and kubworker2 for the first and second worker nodes, respectively. Each command is executed on its own server.

Our servers need to be accessible by specific names. To achieve this, we need to add corresponding A-records to the DNS server. Alternatively, we can open the hosts file on each server:

nano /etc/hosts

And add entries like:

192.168.0.5 kubmaster1.automationtools.me kubmaster1

192.168.0.6 kubworker1.automationtools.me kubworker1

192.168.0.7 kubworker2.automationtools.me kubworker2

- Install the necessary components: additional packages and utilities. First, let's update the list of packages and the system itself:

apt update && apt upgrade

Then install the packages:

apt install curl apt-transport-https git iptables-persistent

Where:

- git: utility for working with GIT. Needed for downloading files from a git repository.

- curl: utility for sending GET, POST, and other requests to an http server. Needed for downloading the Kubernetes repository key.

- apt-transport-https: allows access to APT repositories via https protocol.

- iptables-persistent: utility for saving rules created in iptables (not required, but increases convenience).

During the installation process, iptables-persistent may ask for confirmation to save firewall rules – refuse.

- Disable the swap file. By default, the kubelet refuses to start while swap is enabled.

Run the command to disable it once:

swapoff -a

To prevent swap from being re-enabled after a server reboot, open the following file for editing:

nano /etc/fstab

And comment out the swap line:

#/swap.img none swap sw 0 0

- Load additional kernel modules. Create the file:

nano /etc/modules-load.d/k8s.conf

br_netfilter
overlay

The br_netfilter module extends the capabilities of netfilter; overlay is required for Docker.

Let’s load the modules into the kernel:

modprobe br_netfilter

modprobe overlay

Check that the modules are loaded:

lsmod | grep -E "br_netfilter|overlay"

We should see something like:

overlay 114688 10
br_netfilter 28672 0
bridge 176128 1 br_netfilter

- Change the kernel parameters.

Create a configuration file:

nano /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

The net.bridge.bridge-nf-call-iptables and net.bridge.bridge-nf-call-ip6tables parameters control whether traffic crossing a bridge is passed to netfilter for processing. In our example, we enable this processing for both IPv4 and IPv6.
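For scripted setups, the same file can be written without opening an editor. This is a minimal sketch, assuming a POSIX shell; the function name write_sysctl_conf and the scratch file are our own illustration. On a real node the target would be /etc/sysctl.d/k8s.conf, written as root.

```shell
#!/bin/sh
# Write the two bridge parameters to the file given as $1.
# On a real node: write_sysctl_conf /etc/sysctl.d/k8s.conf (as root).
write_sysctl_conf() {
    cat > "$1" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demonstration against a scratch file, so nothing on the system changes.
sysctl_conf="$(mktemp)"
write_sysctl_conf "$sysctl_conf"

# Sanity check: every non-empty line must have the "key = value" shape.
awk 'NF && $2 != "=" { bad = 1 } END { exit bad }' "$sysctl_conf" \
    && echo "syntax looks fine"
```

Keeping the target path as an argument lets us try the function on a scratch file before touching /etc.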

Apply the parameters with the following command:

sysctl --system

Firewall

We create different sets of rules for the master node and the worker.

By default, the firewall on Ubuntu is configured to allow all traffic. If we are setting up our cluster in a test environment, firewall configuration is not necessary.

- On the master node (Control-plane)

Execute the following command:

iptables -I INPUT 1 -p tcp --match multiport --dports 6443,2379:2380,10250:10252 -j ACCEPT

In this example, we open the following ports:

- 6443: connection for management (Kubernetes API).
- 2379:2380: etcd server client API, used by the control plane.
- 10250:10252: kubelet API, scheduler, and controller manager, respectively.

To save the rules, execute the command:

netfilter-persistent save

- On the worker node:

For container nodes, we open the following ports:

iptables -I INPUT 1 -p tcp --match multiport --dports 10250,30000:32767 -j ACCEPT

where:

- 10250: connection to the kubelet API.
- 30000:32767: default port range for connecting to pods (NodePort Services).
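If the same setup script is used on every node, the two port lists above can be selected by role. A small sketch under that assumption; the function names k8s_ports and print_rule are hypothetical, and the script only prints the iptables command so that it can be reviewed before running it as root.

```shell
#!/bin/sh
# Print the TCP port list for a node role, matching the rules shown above.
k8s_ports() {
    case "$1" in
        master) echo "6443,2379:2380,10250:10252" ;;
        worker) echo "10250,30000:32767" ;;
        *) echo "unknown role: $1" >&2; return 1 ;;
    esac
}

# Print (not run) the iptables command for the given role.
print_rule() {
    ports="$(k8s_ports "$1")" || return 1
    echo "iptables -I INPUT 1 -p tcp --match multiport --dports $ports -j ACCEPT"
}

print_rule master
print_rule worker
```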

Save the rules using the command:

netfilter-persistent save

Installing and configuring Docker

Install Docker on all cluster nodes using the following command:

apt install docker.io

After installation, enable the Docker service to start automatically:

systemctl enable docker

Create a file:

nano /etc/docker/daemon.json

{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2",
  "storage-opts": [
    "overlay2.override_kernel_check=true"
  ]
}

For us, the important setting is cgroupdriver, which should be set to systemd. Otherwise, a warning is issued when creating the Kubernetes cluster. Although this does not prevent the cluster from working, we will try to deploy without errors and warnings from the system.

And then we restart docker:

systemctl restart docker

Installing Kubernetes

We will install the necessary components from the repository. Let's add its signing key:

curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

Let's create a file with the repository configuration:

nano /etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

Let's update the package list:

apt update

Install the packages:

apt install kubelet kubeadm kubectl

Where:

- kubelet is a service that runs on each node of the cluster and monitors the health of the pods.
- kubeadm is a utility for managing the Kubernetes cluster.
- kubectl is a utility for sending commands to the Kubernetes cluster.
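The key and repository steps above rely on apt-key and apt.kubernetes.io. The Kubernetes project has since moved its packages to pkgs.k8s.io, with a separate repository per minor release. The sketch below only builds the repository line used there; the release v1.30 and the keyring path are assumptions to adjust, and the function name k8s_repo_line is our own.

```shell
#!/bin/sh
# Build the APT source line for the pkgs.k8s.io repository.
# $1: Kubernetes minor release, e.g. v1.30 (an assumption; pick your own).
k8s_repo_line() {
    keyring=/etc/apt/keyrings/kubernetes-apt-keyring.gpg
    echo "deb [signed-by=$keyring] https://pkgs.k8s.io/core:/stable:/$1/deb/ /"
}

k8s_repo_line v1.30

# On a real node (as root) the printed line would be written to
# /etc/apt/sources.list.d/kubernetes.list, and the signing key fetched with:
#   curl -fsSL https://pkgs.k8s.io/core:/stable:/v1.30/deb/Release.key \
#     | gpg --dearmor -o /etc/apt/keyrings/kubernetes-apt-keyring.gpg
```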

The normal operation of the cluster heavily depends on the version of the installed packages. Therefore, uncontrolled updates can lead to a loss of functionality of the entire system. To prevent this from happening, we prohibit the update of installed components.

apt-mark hold kubelet kubeadm kubectl

Installation is complete. You can now run the command:

kubectl version --client --output=yaml

and see the installed version of the program:

clientVersion:
  buildDate: "2023-01-11T12:33:16Z"
  compiler: gc
  gitCommit: aef86a93758dc3cb2c658dd9657ab4ad4afc21cb
  gitTreeState: clean
  gitVersion: v1.24.3
  goVersion: go1.18.3
  major: "1"
  minor: "24"
  platform: linux/amd64
kustomizeVersion: v4.5.4

Our servers are ready for creating a cluster.

Creating a cluster

Let’s consider the process of setting up the master node (control-plane) and attaching two worker nodes to it separately.

Setting up the control-plane (master node)

Execute the command on the master node:

kubeadm init --pod-network-cidr=10.244.0.0/16

This command performs the initial setup and preparation of the main cluster node. The pod-network-cidr flag sets the address range of the internal pod subnet for our cluster.
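To get a feel for the size of this range, the arithmetic can be sketched in the shell. The /16 prefix comes from the command above; the per-node /24 mask is an assumption based on the controller manager's default for IPv4 and may differ in a customized cluster.

```shell
#!/bin/sh
# Prefix length passed to kubeadm via --pod-network-cidr (10.244.0.0/16).
cluster_prefix=16
# Assumption: default per-node mask size for IPv4 pod subnets (/24).
node_prefix=24

total_addresses=$(( 1 << (32 - cluster_prefix) ))
node_subnets=$(( 1 << (node_prefix - cluster_prefix) ))
addresses_per_node=$(( 1 << (32 - node_prefix) ))

echo "addresses in the pod network: $total_addresses"
echo "per-node subnets available:   $node_subnets"
echo "addresses per node subnet:    $addresses_per_node"
```

For our three-node cluster this range is far more than enough.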

The execution will take a few minutes, after which we will see something like:

Your Kubernetes control-plane has initialized successfully!

...

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.15:6443 --token f7sihu.wmgzwxkvbr8500al \
    --discovery-token-ca-cert-hash sha256:6746f66b2197ef496192c9e240b31275747734cf74057e04409c33b1ad280321

This command should be entered on the worker nodes to join them to our cluster. You can copy it, but later we will generate this command again.

Create a KUBECONFIG variable in the user’s environment to specify the path to the kubernetes configuration file:

export KUBECONFIG=/etc/kubernetes/admin.conf

To avoid having to repeat this command every time you log in to the system, open the file:

nano /etc/environment

And add a line to it:

export KUBECONFIG=/etc/kubernetes/admin.conf

You can view the list of cluster nodes using the command:

kubectl get nodes

At this stage, we should only see the master node:

NAME STATUS ROLES AGE VERSION
kubmaster1.automationtools.me NotReady <none> 10m v1.20.2

To complete the setup, you need to install a CNI (Container Network Interface) plugin. In this example it is flannel:

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The cluster control node is ready to operate.

Setting up a worker node

To join a worker node, we can use the command obtained after initializing the master node, or generate it again (on the master node):

kubeadm token create --print-join-command

This command prints the command for joining a new node to the cluster, for example:

kubeadm join 192.168.0.15:6443 --token f7sihu.wmgzwxkvbr8500al \
    --discovery-token-ca-cert-hash sha256:6746f66b2197ef496192c9e240b31275747734cf74057e04409c33b1ad280321

Copy it and run it on both of our worker nodes. After the command completes, we should see:

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

On the master node, we enter:

kubectl get nodes

We should see:

NAME STATUS ROLES AGE VERSION
kubmaster1.automationtools.me Ready control-plane,master 18m v1.20.2
kubworker1.automationtools.me Ready <none> 79s v1.20.2
kubworker2.automationtools.me NotReady <none> 77s v1.20.2

Note that the node kubworker2 has a status of NotReady. This means that its configuration is still in progress and you need to wait. Usually, the status changes to Ready within 2 minutes.
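When waiting for nodes, it can be handy to list only those that are not Ready yet. A minimal sketch that filters the text output of kubectl get nodes; the function name not_ready_nodes is hypothetical, and the sample input is copied from the listing above so the script runs without a cluster.

```shell
#!/bin/sh
# Read `kubectl get nodes` output on stdin and print the names of nodes
# whose STATUS column is anything other than exactly "Ready".
# (A cordoned node shows "Ready,SchedulingDisabled" and is printed too.)
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Sample input; on the master you would run:
#   kubectl get nodes | not_ready_nodes
sample='NAME STATUS ROLES AGE VERSION
kubmaster1.automationtools.me Ready control-plane,master 18m v1.20.2
kubworker1.automationtools.me Ready <none> 79s v1.20.2
kubworker2.automationtools.me NotReady <none> 77s v1.20.2'

printf '%s\n' "$sample" | not_ready_nodes
```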

Our cluster is ready for work. Now you can create pods, deployments, and services. Let’s look at these processes in more detail.

Pods

A pod is the smallest deployable unit in Kubernetes. Each pod can include several containers (at least one). Let's consider several examples of how to work with pods. All commands are executed on the master.

Creation

Pods are created using the kubectl command, for example:

kubectl run nginx --image=nginx:latest --port=80

In this example, we create a pod named nginx that uses the latest version of the nginx Docker image and listens for requests on port 80.

To get network access to the created pod, create a port-forward with the following command:

kubectl port-forward nginx --address 0.0.0.0 8888:80

In this example, requests arriving on port 8888 of the host where the command is running will be forwarded to port 80 of the pod.

The kubectl port-forward command is interactive. We only use it for testing purposes. To forward ports in Kubernetes, we use Services – this will be discussed below.

It is possible to open a browser and enter the address http://<master IP address>:8888 – a welcome page for NGINX should open.

Viewing

You can get a list of all pods in the cluster using the command:

kubectl get pods

In our example we should see something like:

NAME READY STATUS RESTARTS AGE
nginx 1/1 Running 0 3m26s

You can view detailed information about a specific pod using the command:

kubectl describe pods nginx

Running commands inside a container

We can run a single command inside a container, for example:

kubectl exec nginx -- date

In this example, the date command is executed inside the nginx container.

We can also connect to the container's command line using the command:

kubectl exec --tty --stdin nginx -- /bin/bash

Note that not all container images have the bash shell installed, for example, images based on Alpine. If we get the error "error: Internal error occurred: error executing command in container", we can try using sh instead of bash.

Deletion

To delete a pod, enter:

kubectl delete pods nginx

Using Manifests

In a production environment, managing pods is done through special files that describe how the pod should be created and configured – manifests. Let’s consider an example of creating and applying such a manifest.

We will create a YAML file:

nano manifest_pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: web-srv
  labels:
    app: web_server
    owner: automationtools
    description: web_server_for_site
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
        - containerPort: 443
    - name: php-fpm
      image: php:fpm  # the official PHP image provides FPM under this tag
      ports:
        - containerPort: 9000
    - name: mariadb
      image: mariadb:latest
      env:
        # placeholder value; the mariadb image exits on first start without a root password setting
        - name: MARIADB_ROOT_PASSWORD
          value: change-me
      ports:
        - containerPort: 3306

In this example, a pod named web-srv will be created; it runs 3 containers (nginx, php-fpm, and mariadb) based on the respective images.

Labels or metadata have significant importance for Kubernetes objects. It is crucial to describe them, and later, these labels can be used for configuring services and deployments.

To apply the manifest, execute the command:

kubectl apply -f manifest_pod.yaml

We should see the response:

pod/web-srv created

View pods using the following command:

kubectl get pods

We should see:

NAME READY STATUS RESTARTS AGE
web-srv 3/3 Running 0 3m11s

In the READY column we may see 0/3 or 1/3. This means that the containers inside the pod are still being created and we need to wait.
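The READY value is simply "ready containers / total containers", so it is easy to test in a script. A short sketch, assuming a POSIX shell; the function name all_containers_ready is our own.

```shell
#!/bin/sh
# Return success when a READY value such as "3/3" shows all containers ready.
all_containers_ready() {
    ready="${1%/*}"   # part before the slash
    total="${1#*/}"   # part after the slash
    [ "$total" -gt 0 ] && [ "$ready" -eq "$total" ]
}

for value in 0/3 1/3 3/3; do
    if all_containers_ready "$value"; then
        echo "$value: ready"
    else
        echo "$value: still starting"
    fi
done
```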

Deployments

Deployments allow you to manage instances of pods. They control their recovery and load balancing. Let’s consider an example of using Deployments in Kubernetes.

Creation

We create a Deployment using the following syntax:

kubectl create deploy <deployment name> --image <image to be used>

For example:

kubectl create deploy web-set --image nginx:latest

This command creates a deployment named web-set that uses nginx:latest as the image.

Viewing

You can view the list of deployments using the command:

kubectl get deploy

For a specific deployment, we can view a detailed description as follows:

kubectl describe deploy web-set

In this example, we look at the description of the deployment named web-set.

Scaling

As mentioned above, a deployment can balance the load. This is controlled by the scaling parameter:

kubectl scale deploy web-set --replicas 3

In this example, we tell our previously created deployment to use 3 replicas, meaning Kubernetes will run 3 instances of the pod.

We can also configure automatic scaling:

kubectl autoscale deploy web-set --min=5 --max=10 --cpu-percent=75

In this example, Kubernetes will run 5 to 10 pod instances; a new instance is added when the average processor load exceeds 75%.
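How the autoscaler picks a replica count can be illustrated with the formula from the Kubernetes documentation: desired = ceil(current replicas * current load / target load), limited to the min and max values. The sketch below is a simplification that ignores the controller's tolerance and stabilization behavior; the function name desired_replicas is hypothetical.

```shell
#!/bin/sh
# desired = ceil(cur * load / target), clamped to the range [lo, hi].
# Arguments: current replicas, current CPU %, target CPU %, min, max.
desired_replicas() {
    cur=$1; load=$2; target=$3; lo=$4; hi=$5
    # Integer ceiling division: (a + b - 1) / b
    desired=$(( (cur * load + target - 1) / target ))
    [ "$desired" -lt "$lo" ] && desired=$lo
    [ "$desired" -gt "$hi" ] && desired=$hi
    echo "$desired"
}

# 5 running replicas, target 75 %, limits 5..10 as in the command above.
for load_pct in 30 75 90 150; do
    echo "cpu=${load_pct}% -> $(desired_replicas 5 "$load_pct" 75 5 10) replicas"
done
```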

You can view the created autoscaling parameters using the following command:

kubectl get hpa

Editing

For our deployment, we can change the image used, for example:

kubectl set image deploy/web-set nginx=httpd:latest --record

With this command, we replace the image of the nginx container in the web-set deployment with httpd; the --record flag records the action in the change history.

If we used the --record flag, we can view the change history using the following command:

kubectl rollout history deploy/web-set

A deployment can be restarted with the command:

kubectl rollout restart deploy web-set

Manifest

As with pods, we can use manifests to create deployments. Let’s look at a specific example.

Create a new file:

nano manifest_deploy.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-deploy
  labels:
    app: web_server
    owner: automationtools
    description: web_server_for_site
spec:
  replicas: 5
  selector:
    matchLabels:
      project: myweb
  template:
    metadata:
      labels:
        project: myweb
        owner: automationtools
        description: web_server_pod
    spec:
      containers:
        - name: myweb-httpd
          image: httpd:latest
          ports:
            - containerPort: 80
            - containerPort: 443
---
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: web-deploy-autoscaling
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: web-deploy  # must match the Deployment's metadata.name
  minReplicas: 5
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 75
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80

In this manifest, we create a deployment and its autoscaling. In total, we get 5 pod instances for the web-deploy deployment, which can be scaled up to 10 instances. A new instance is added when the CPU load exceeds 75% or when memory consumption exceeds 80%. Please note that uppercase letters and spaces should not be used in object names.
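The naming rule can be checked before applying a manifest. The sketch below tests a candidate name against the lowercase DNS-label pattern (lowercase letters, digits, and hyphens, at most 63 characters). This is a deliberately strict assumption: many object types also accept dots and longer names. The function name valid_name is our own.

```shell
#!/bin/sh
# Succeed when $1 looks like a lowercase DNS label:
# starts and ends with a letter or digit, hyphens allowed in between.
valid_name() {
    [ "${#1}" -le 63 ] \
        && printf '%s' "$1" | grep -Eq '^[a-z0-9]([-a-z0-9]*[a-z0-9])?$'
}

for name in web-deploy Web-Deploy "web deploy" web_deploy; do
    if valid_name "$name"; then
        echo "'$name': ok"
    else
        echo "'$name': not a valid object name"
    fi
done
```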

To create objects using our manifest, enter:

kubectl apply -f manifest_deploy.yaml

We should see:

deployment.apps/web-deploy created
horizontalpodautoscaler.autoscaling/web-deploy-autoscaling created

The web-deploy and web-deploy-autoscaling objects have been created.

Deletion

To delete a specific deployment, use the command:

kubectl delete deploy web-set

To remove all deployments, use the --all flag instead of specifying a deployment name:

kubectl delete deploy --all

Autoscaling criteria for a specific deployment can be removed with the command:

kubectl delete hpa web-set

All autoscaling criteria can be deleted using the following command:

kubectl delete hpa --all

Objects created using a manifest can be deleted using the command:

kubectl delete -f manifest_deploy.yaml

Services

Services provide network access to deployments. There are several types of services:

- ClusterIP — mapping an address to deployments for connections within the Kubernetes cluster.

- NodePort — for external publication of deployment.

- LoadBalancer — mapping through external load balancer.

- ExternalName — mapping a service by name (returns the value of the CNAME record).

We will consider the first two options.

Binding to Deployments

Let’s try to create mappings for the previously created deployment:

kubectl expose deploy web-deploy --type=ClusterIP --port=80

Here web-deploy is the deployment that we created using the manifest. The resource is published on the internal port 80, and the containers can be reached from inside the Kubernetes cluster.

To create a mapping that allows connecting to the containers from an external network, execute the following command instead (a service named web-deploy can exist only once, so delete the ClusterIP service first or pass a different name with --name):

kubectl expose deploy web-deploy --type=NodePort --port=80

This command differs from the one above only in the NodePort type: for this deployment, a port for external connections is assigned, which leads to the internal one (in our example, 80).

Viewing

To view the services we have created, enter:

kubectl get services

We can see something like:

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
web-deploy NodePort 10.111.229.132 <none> 80:30929/TCP 21s

This output shows that we have a service of type NodePort, and we can connect to it via port 30929.

We can try opening a browser and entering http://<master-IP-address>:30929 – we should see the desired page (in our examples, either NGINX or Apache).
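The node port can also be pulled out of the PORT(S) column in a script, for example to build the URL automatically. A minimal sketch using only shell parameter expansion; the function name node_port is hypothetical, and only the first port mapping is considered.

```shell
#!/bin/sh
# Extract the node port from a PORT(S) value such as "80:30929/TCP".
node_port() {
    mapping="${1%%/*}"                  # "80:30929/TCP" -> "80:30929"
    case "$mapping" in
        *:*) echo "${mapping#*:}" ;;    # "80:30929"     -> "30929"
        *)   return 1 ;;                # no colon: no node port assigned
    esac
}

port="$(node_port "80:30929/TCP")"
echo "http://<master-IP-address>:$port"
```

For a ClusterIP service the column contains only something like 80/TCP, and the function returns a non-zero status.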

To view the list of services with selectors, use the following command:

kubectl get services -o wide

Deletion

We delete the created service using the command:

kubectl delete services web-deploy

In this example, the service for the web-deploy deployment is removed.

You can remove all services with the command:

kubectl delete services --all

Manifest

Just like with pods and deployments, we can use manifest files. Let’s consider a small example.

nano manifest_service.yaml

apiVersion: v1
kind: Service
metadata:
  name: web-service
  labels:
    app: web_server
    owner: automationtools
    description: web_server_for_site
spec:
  selector:
    project: myweb
  type: NodePort
  ports:
    - name: app-http
      protocol: TCP
      port: 80
      targetPort: 80
    - name: app-smtp
      protocol: TCP
      port: 25
      targetPort: 25

In this example, we create a service that selects the pods of our deployment using the label project: myweb.

ConfigMaps

ConfigMap allows us to store configuration files in K8S and, if necessary, mount them to specific pods. Let’s look at the process of creating and using them.

Creation

We can use files, directories, command line values, or env files (in key-value format) as a data source. Let’s go through them in order.

- Config from file:

kubectl create configmap nginx-base --from-file=/opt/k8s/configmap/nginx.conf

In our example, we create a configuration named nginx-base whose value is the contents of the file /opt/k8s/configmap/nginx.conf.

- From a directory:

kubectl create configmap nginx-base --from-file=/opt/k8s/configmap/

In this example, all files from the directory /opt/k8s/configmap are included in the nginx-base configuration.

- From values specified in the command:

kubectl create configmap nginx-spec --from-literal=hostname=automationtools.me --from-literal=cores=8

- From an env file:

kubectl create configmap nginx-properties --from-env-file=/opt/k8s/configmap/nginx.env

View

Show a list of all configs created in k8s:

kubectl get configmaps

Detailed information with content:

kubectl get configmaps -o yaml nginx-base

Binding to Containers

The values of our configurations can be mounted as files or system variables. We can apply them to both pods and deployments. Let’s consider an example of using it with a description in a manifest file.

- Mounting as a configuration file:

...
spec:
  containers:
    - name: myweb-nginx
      image: nginx:latest
      ...
      volumeMounts:
        - name: config-nginx
          mountPath: /etc/nginx/nginx.conf
          subPath: nginx.conf
  volumes:
    - name: config-nginx
      configMap:
        name: nginx-base

Let's take a closer look at what we've configured:

- Note that this fragment can be used in a manifest for either a Pod or a Deployment, since we're working with the configuration for containers.

- We created a volume called config-nginx, which takes its contents from the nginx-base configuration.

- We attached config-nginx to our container using the volumeMounts option. With mountPath, we specify the path where the configuration will be mounted.

- With the subPath option we select the nginx.conf key of the ConfigMap, so that only this one file is mounted. In that case mountPath must be the full path of the destination file (/etc/nginx/nginx.conf) rather than the directory.

- Using for creating system variables:

...
spec:
  containers:
    - name: myweb-nginx
      image: nginx:latest
      ...
      envFrom:
        - configMapRef:
            name: nginx-spec

In this example, everything is a bit simpler: with the envFrom directive we specify that system variables should be created from the nginx-spec config.

To apply the changes, enter:

kubectl apply -f manifest_file.yaml

where manifest_file.yaml is the file in which we made the changes.

Deletion

You can delete the config using the following command:

kubectl delete configmap nginx-spec

Ingress Controller

This guide does not cover working with an Ingress Controller in detail; we leave this topic for independent study.

An Ingress Controller provides a load balancer that distributes network requests between our services. The order of network traffic processing is determined using Ingress Rules.

To install the Contour Ingress Controller (among the many controllers, it is easy to install and, at the time this guide was updated, fully supported the latest version of Kubernetes), enter:

kubectl apply -f https://projectcontour.io/quickstart/contour.yaml

Installing a Web Interface

The web interface allows you to view information about the cluster in a convenient format.

Most instructions explain how to access the web interface from the same computer as the cluster (at 127.0.0.1 or localhost). However, we will consider setting up remote access, as this is more relevant for server infrastructure.

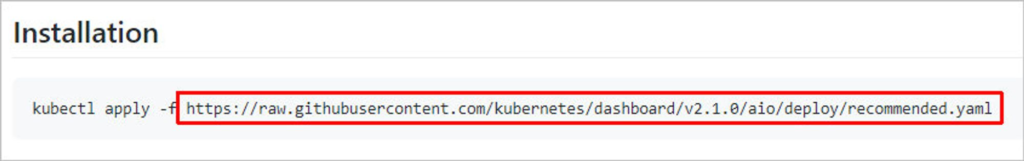

Go to the web interface page on GitHub and copy the link to the latest version of the yaml file:

- At the time of updating the instructions, the latest version of the interface was 2.7.0.

We download the yaml file using the following command:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

where https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml is the link that we copied from GitHub.

Open the downloaded file for editing:

nano recommended.yaml

Comment out the blocks for kind: Namespace and kind: Secret (there are several blocks with kind: Secret in the file; we need the one with name: kubernetes-dashboard-certs):

...
#apiVersion: v1
#kind: Namespace
#metadata:
#  name: kubernetes-dashboard
...
#apiVersion: v1
#kind: Secret
#metadata:
#  labels:
#    k8s-app: kubernetes-dashboard
#  name: kubernetes-dashboard-certs
#  namespace: kubernetes-dashboard
#type: Opaque

We comment out these blocks because we will create these objects in Kubernetes manually.

Now in the same file, find kind: Service (the one with name: kubernetes-dashboard) and add the lines type: NodePort and nodePort: 30001:

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

Thus, we publish our service on an external address and port 30001.

To connect to the web interface from a non-local address, starting from version 1.17 it is mandatory to use an encrypted connection (https). This requires a certificate. In this guide, we will generate a self-signed certificate. This approach is convenient for a test environment, but in a production environment it is necessary to purchase a certificate or obtain one for free from Let's Encrypt.

So, let’s create a directory where we will place our certificates:

mkdir -p /etc/ssl/kubernetes

Generate the certificate with the following command:

openssl req -new -x509 -days 1461 -nodes -out /etc/ssl/kubernetes/cert.pem -keyout /etc/ssl/kubernetes/cert.key -subj "/C=RU/ST=SPb/L=SPb/O=Global Security/OU=IT Department/CN=kubernetes.automationtools.me/CN=kubernetes"

The command parameters can be left unchanged. The browser will still issue a warning about an invalid certificate since it is self-signed.
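To see what such a certificate contains, it can be generated into a scratch directory and inspected. This is a sketch, assuming the openssl command-line tool is installed; the scratch directory, the explicit -newkey rsa:2048 option, and the shortened subject are our own choices for the demonstration and do not touch /etc/ssl/kubernetes.

```shell
#!/bin/sh
# Generate a throwaway self-signed certificate in a scratch directory.
workdir="$(mktemp -d)"
openssl req -new -x509 -days 1461 -nodes -newkey rsa:2048 \
    -out "$workdir/cert.pem" -keyout "$workdir/cert.key" \
    -subj "/C=RU/ST=SPb/L=SPb/O=Global Security/OU=IT Department/CN=kubernetes.automationtools.me" \
    2>/dev/null

# Print the subject and the expiry date stored in the certificate.
openssl x509 -in "$workdir/cert.pem" -noout -subject -enddate

# Exit status 0 means the certificate is still valid 30 days (2592000 s) from now.
openssl x509 -in "$workdir/cert.pem" -noout -checkend 2592000
```

The same two inspection commands can be pointed at /etc/ssl/kubernetes/cert.pem on the master.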

Since we commented out the Namespace block in the downloaded file, create the namespace first:

kubectl create namespace kubernetes-dashboard

Then create the secret from the generated certificate files:

kubectl create secret generic kubernetes-dashboard-certs --from-file=/etc/ssl/kubernetes/cert.key --from-file=/etc/ssl/kubernetes/cert.pem -n kubernetes-dashboard

This is why we did not use the Secret definition from the downloaded file: we create it ourselves, pointing to the certificates we generated. Now let's create the remaining objects using the downloaded file:

kubectl create -f recommended.yaml

We will see something like:

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

Let's create a configuration for the admin connection:

nano dashboard-admin.yaml

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: dashboard-admin
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: dashboard-admin-bind-cluster-role
  labels:
    k8s-app: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: dashboard-admin
    namespace: kubernetes-dashboard

Create the objects using this file:

kubectl create -f dashboard-admin.yaml

Now open your browser and go to https://<master IP address>:30001. Your browser will show a certificate error (if you followed the instructions and generated a self-signed certificate). Ignore the error and continue loading.

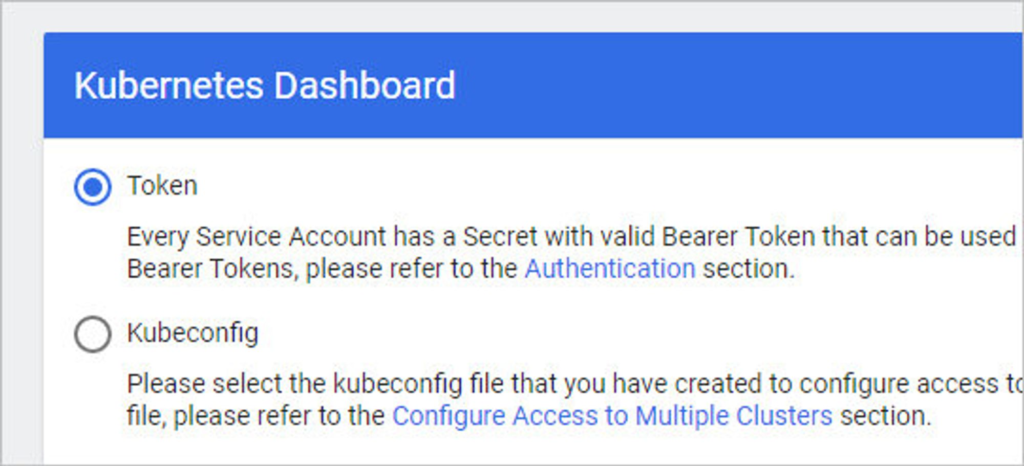

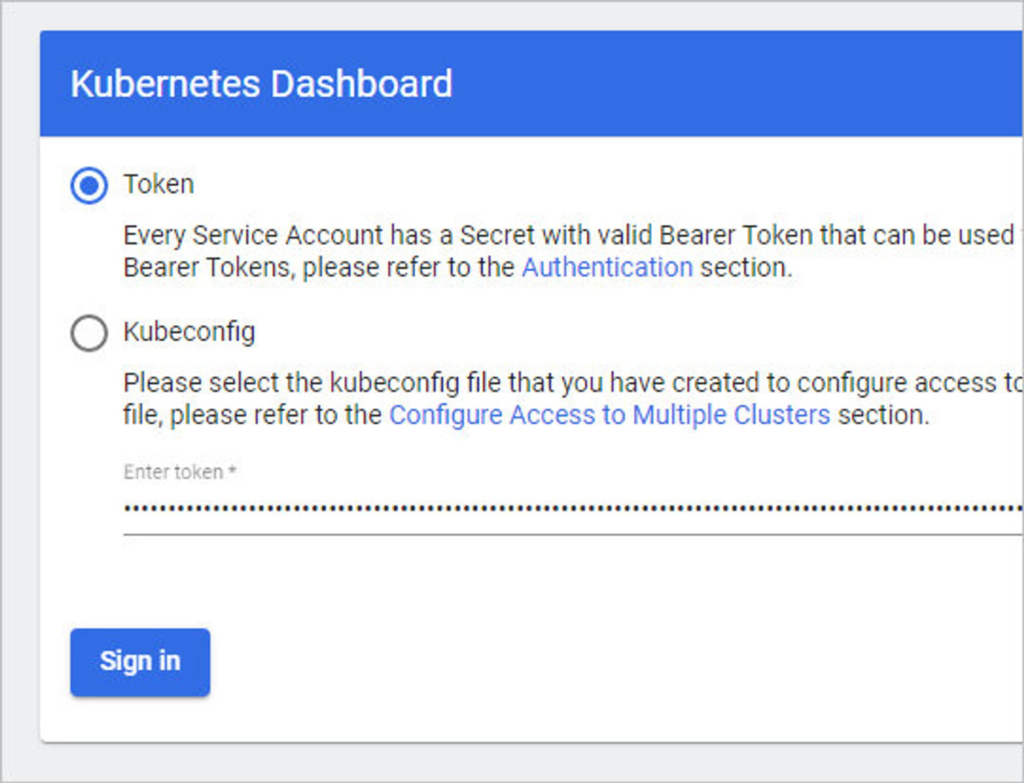

Kubernetes Dashboard requires authentication. For this, you can use a token or a configuration file.

On the server, enter a command to create a service account:

kubectl create serviceaccount dashboard-admin -n kube-system

Let's create a binding that grants our service account the cluster-admin role:

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

Now with the command:

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

we obtain a token for the connection:

Data

====

ca.crt: 1066 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IkpCT0J5TWF2VWxWQUotdHZYclFUaWwza2NfTW1IZVNuSlZSN3hWUzFrNTAifQ.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJkYXNoYm9hcmQtYWRtaW4tdG9rZW4tbnRqNmYiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC5uYW1lIjoiZGFzaGJvYXJkLWFkbWluIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQudWlkIjoiMzIwNjVhYmQtNzAwYy00Yzk5LThmZjktZjc0YjM5MTU0Y2VhIiwic3ViIjoic3lzdGVtOnNlcnZpY2VhY2NvdW50Omt1YmUtc3lzdGVtOmRhc2hib2FyZC1hZG1pbiJ9.wvDGeNiTCRBakDplO6PbdqvPH_W2EsBgJjZnTDflneP3cdXQv6VgBkI8NalplXDRF-lF36KbbC2hpRjbkblrLW7BemIVWYOznmc8kmrgCSxO2FVi93NK3biE9TkDlj1BbdiyfOO86L56vteXGP20X0Xs1h3cjAshs-70bsnJl6z3MY5GbRVejOyVzq_PWMVYsqvQhssExsJM2tKJWG0DnXCW687XHistbYUolhxSRoRpMZk-JrguuwgLH5FYIIU-ZdTZA6mz-_hqrx8PoDvqEfWrsniM6Q0k8U3TMaDLlduzA7rwLRJBQt3C0aD6XfR9wHUqUWd5y953u67wpFPrSA

On Kubernetes 1.24 and later, token Secrets are no longer created automatically for service accounts, so the command above may print nothing; in that case a token can be requested directly with kubectl -n kube-system create token dashboard-admin.

Enter the obtained token in the authorization panel:

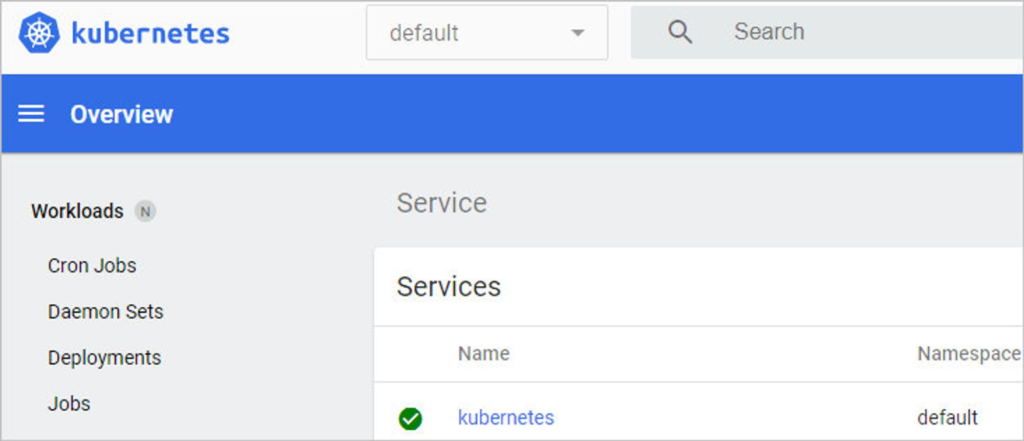

We should see the start screen of the management system:

Removing Nodes

To remove a node from our cluster, we enter 2 commands:

kubectl drain kubworker2.automationtools.me --ignore-daemonsets

kubectl delete node kubworker2.automationtools.me